Artificial intelligence has undoubtedly become one of the most popular technological advancements today. This has led to experimentation with it in a wide variety of settings, with integration into corporate environments among the main uses.

Some companies have decided to build artificial intelligence tools directly into their operations. In real-world cases, this has led to drastic decisions, such as cutting up to 90% of a workforce in order to replace human personnel with automated systems.

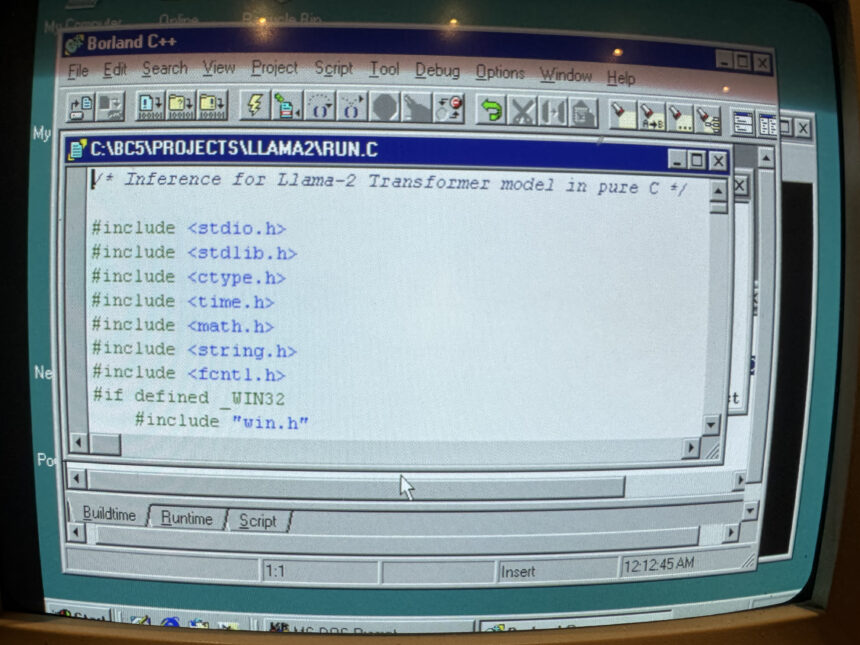

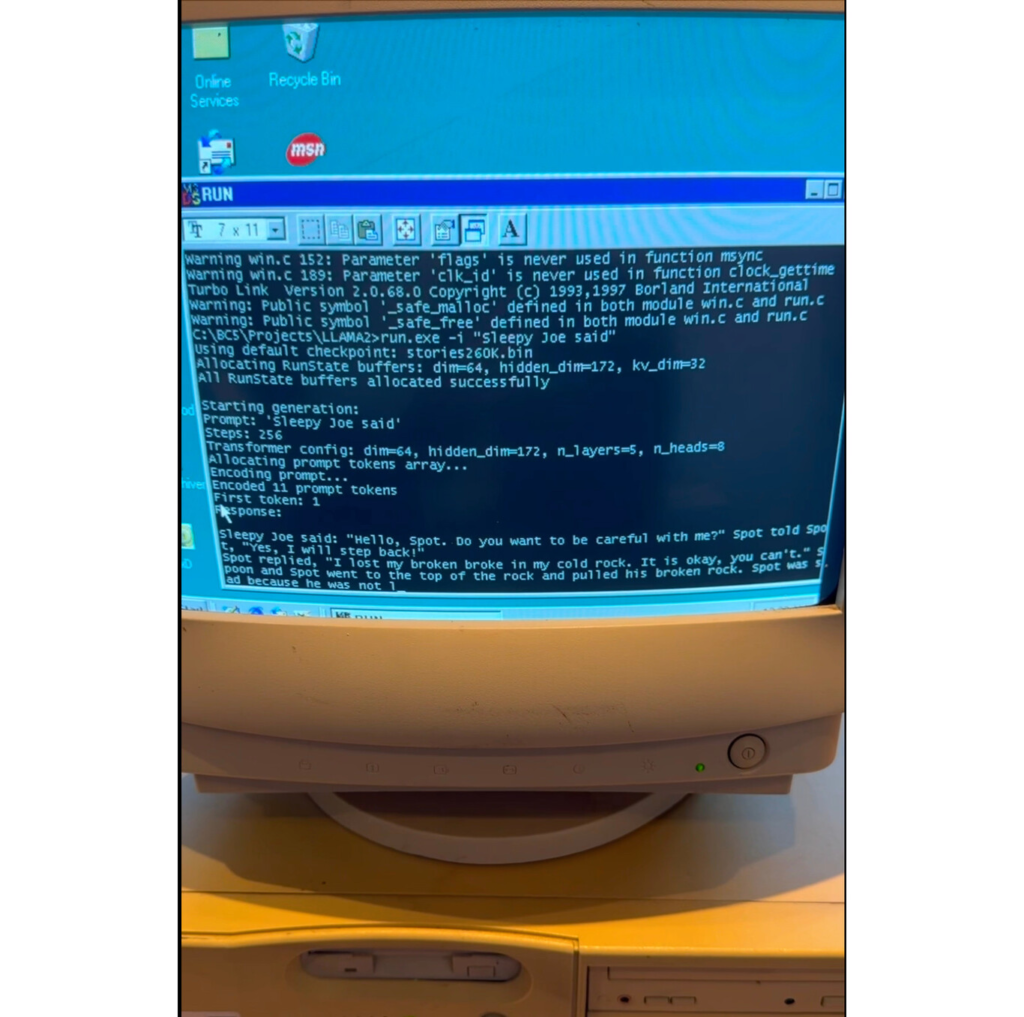

Now, a new experiment shows that language models can run on considerably older computers. According to TechSpot, a group of researchers managed to get a Llama 2-based model running on a Windows 98 PC equipped with 128 MB of RAM and a 350 MHz Pentium II, a processor line Intel first released in May 1997.

> LLM running on Windows 98 PC
>
> 26 year old hardware with Intel Pentium II CPU and 128MB RAM.
>
> Uses llama98.c, our custom pure C inference engine based on @karpathy llama2.c
>
> Code and DIY guide pic.twitter.com/pktC8hhvva

EXO Labs (@exolabs), December 28, 2024

The key component in this achievement was BitNet, an architecture that uses ternary weights (each weight is -1, 0, or 1), which drastically reduces the size of the models. Thanks to this, a 7-billion-parameter model needs only 1.38 GB of storage. That makes it possible to use more affordable hardware optimised to run the model directly on the CPU, without the need for expensive graphics cards.
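The 1.38 GB figure can be checked with some quick arithmetic. The sketch below is only an estimate: it assumes each ternary weight is counted at about 1.58 bits (roughly log2(3), the information in a three-valued weight) and compares that against an assumed 16-bit baseline. The helper name `model_size_gb` is hypothetical, and real model files would also carry extra data such as scaling factors and metadata.

```python
import math


def model_size_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


# A ternary weight takes one of three values {-1, 0, +1},
# so it carries log2(3) ~= 1.58 bits of information.
TERNARY_BITS = math.log2(3)

ternary_7b = model_size_gb(7e9, 1.58)  # assumption: 1.58 bits per weight
fp16_7b = model_size_gb(7e9, 16)       # assumption: 16-bit baseline

print(f"bits per ternary weight: {TERNARY_BITS:.3f}")
print(f"7B ternary: {ternary_7b:.2f} GB")
print(f"7B 16-bit:  {fp16_7b:.2f} GB")
```

Under these assumptions the ternary model comes out at about 1.38 GB, against 14 GB for the same parameter count at 16 bits per weight.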

EXO explains that by prioritising CPU processing, this architecture can be up to 50% more efficient than full-precision models. A model with 100 billion parameters could even run on a single CPU at speeds approaching human reading speed.
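One reason ternary weights suit CPUs is that a matrix-vector product no longer needs multiplications: each weight either adds the input, subtracts it, or skips it. The following is a minimal illustration of that idea in plain Python. It is not the llama98.c or BitNet implementation, and the function name `ternary_matvec` and the sample values are made up for the example.

```python
def ternary_matvec(weights, x):
    """Multiply a ternary matrix (entries in {-1, 0, 1}) by a vector x
    using only additions and subtractions."""
    out = []
    for row in weights:
        acc = 0.0
        for w, xi in zip(row, x):
            if w == 1:
                acc += xi      # +1 weight: add the input
            elif w == -1:
                acc -= xi      # -1 weight: subtract the input
            # a 0 weight contributes nothing and is skipped
        out.append(acc)
    return out


# Hypothetical 2x3 ternary weight matrix and input vector.
W = [[1, 0, -1],
     [-1, 1, 1]]
x = [0.5, 2.0, -1.0]

y = ternary_matvec(W, x)
print(y)  # [1.5, 0.5]
```

A production engine would presumably pack several ternary weights into each byte and process them in bulk, but the inner loop still reduces to adds and subtracts.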

This approach, the outlet notes, could be a valuable step towards making these technologies more accessible. By enabling advanced models to run on modest equipment, it opens up new possibilities for integrating artificial intelligence into more devices, products, and contexts around the world.